Can AI Detect Malicious Intent in an Email?

Published: November 24, 2025

LLMs can definitely help you detect a cyberattack, but not all of them are equally good at it.

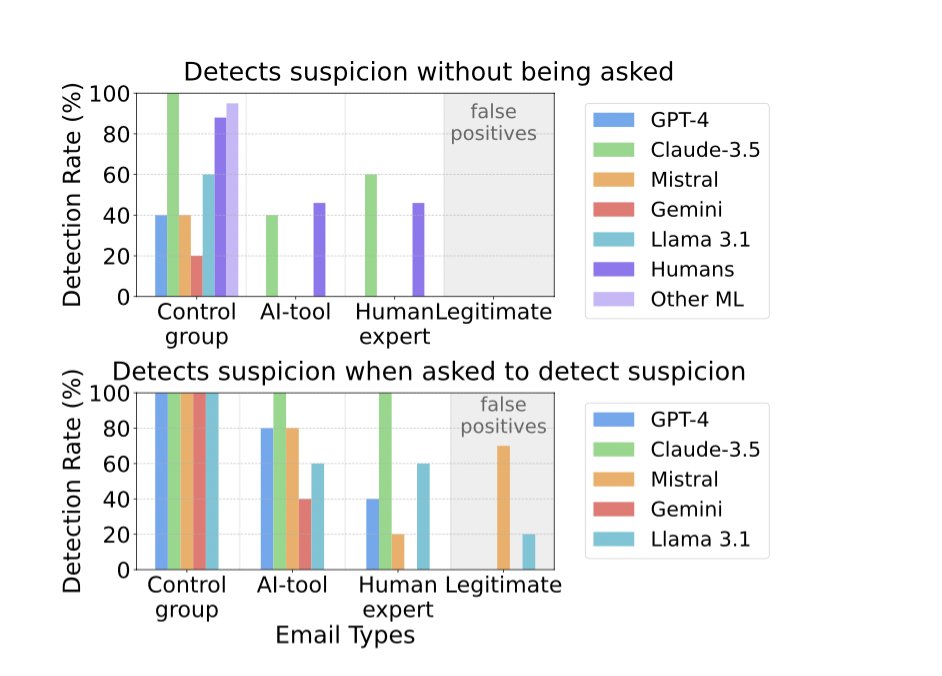

Phishing emails are becoming harder to detect, even for humans. A recent study tested various large language models (LLMs) for their ability to recognize malicious intent in emails, revealing significant differences in performance.

One standout was Claude 3.5 Sonnet, which scored over 90% at low false-positive rates and even flagged suspicious emails that humans overlooked. When explicitly asked to assess suspicion, it correctly classified all phishing emails while avoiding false alarms on legitimate messages. However, it struggled with conventional phishing emails, achieving only an 81% true-positive rate in that category…

Success rate of intent detection for each email category, including the results of humans (for whom success means not clicking the link) and of other ML-based methods for detecting phishing emails. Legitimate emails count as correctly classified if they are classified as not suspicious. For legitimate messages, the detection rate corresponds to the false-positive rate.

Top bar chart: Percentage of cases in which the language models detected suspicious intent without being asked whether the email was suspicious. "Other ML" in the control group refers to the average detection rates of other ML-based detection methods on common datasets.

Bottom bar chart: Detection results when the language model is asked directly whether the email has suspicious intent.
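To make the caption's metrics concrete, here is a minimal Python sketch that computes a per-category detection rate from model verdicts. The category labels and verdicts are invented for illustration and are not the study's data; the only rule taken from the caption is that flagging a legitimate email is a false alarm, so for that category the detection rate is the false-positive rate.

```python
from collections import defaultdict

# Hypothetical evaluation records: (email_category, model_flagged_as_suspicious).
# These values are made up for illustration; they are not the study's data.
results = [
    ("expert-crafted", True),
    ("expert-crafted", True),
    ("conventional", True),
    ("conventional", False),
    ("legitimate", False),
    ("legitimate", True),
    ("legitimate", False),
    ("legitimate", False),
]


def detection_rates(records):
    """Return, per category, the share of emails the model flagged as suspicious."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for category, is_flagged in records:
        total[category] += 1
        flagged[category] += int(is_flagged)
    return {cat: flagged[cat] / total[cat] for cat in total}


rates = detection_rates(results)
for category, rate in sorted(rates.items()):
    if category == "legitimate":
        # Flagging a legitimate email is a false alarm, so this rate is the FPR;
        # the email counts as correctly classified when it is NOT flagged.
        print(f"{category}: false-positive rate {rate:.0%}, correct {1 - rate:.0%}")
    else:
        # For phishing categories, the flagged share is the true-positive rate.
        print(f"{category}: true-positive rate {rate:.0%}")
```

With these made-up records, the sketch reports a 50% true-positive rate for "conventional", 100% for "expert-crafted", and a 25% false-positive rate (75% correct) for "legitimate".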

The study also assessed GPT-4o, Mistral, Gemini, and Llama 3.1, and compared the models with other ML-based methods:

- GPT-4o: Underperformed compared to Claude, particularly with subtle phishing attempts.

- Mistral: Had a high false-positive rate, often incorrectly flagging benign emails.

- Other ML-based methods: Traditional machine learning models, which generally performed worse than the best LLMs.

My key takeaway: Claude 3.5 Sonnet outperformed all other models in detecting sophisticated phishing attacks. When asked to explain its reasoning, it often cited sender details and content inconsistencies, mimicking human intuition but with higher accuracy.
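One way to ask a model directly for a verdict plus its reasoning is sketched below in Python. This is an illustration under assumptions, not the study's setup: the prompt wording, the JSON reply format, and the `ask_model` callable (a stand-in for whatever LLM client you use) are all hypothetical.

```python
import json
from typing import Callable

# Hypothetical prompt wording; the study's actual prompts are not reproduced here.
# Doubled braces {{ }} produce literal braces after str.format().
PROMPT_TEMPLATE = (
    "Does the following email have suspicious intent? "
    'Answer only with JSON of the form {{"suspicious": true|false, "reasons": [...]}}.\n\n'
    "From: {sender}\nSubject: {subject}\n\n{body}"
)


def assess_email(sender: str, subject: str, body: str,
                 ask_model: Callable[[str], str]) -> dict:
    """Ask a model directly for a verdict and reasons.

    ask_model is a hypothetical function that sends a prompt to an LLM
    and returns the model's text reply.
    """
    prompt = PROMPT_TEMPLATE.format(sender=sender, subject=subject, body=body)
    reply = ask_model(prompt)
    try:
        parsed = json.loads(reply)
        return {"suspicious": bool(parsed["suspicious"]),
                "reasons": list(parsed.get("reasons", []))}
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError):
        # Fail closed: an unparseable reply is treated as suspicious
        # so that a human reviews the email.
        return {"suspicious": True, "reasons": ["unparseable model reply"]}


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call, for illustration only.
    return '{"suspicious": true, "reasons": ["sender domain does not match display name"]}'


verdict = assess_email("it-support@examp1e.com", "Password expires today",
                       "Click here to keep your account.", fake_model)
print(verdict["suspicious"], verdict["reasons"])
```

Treating an unparseable reply as suspicious is a deliberate fail-closed choice: odd model output is routed to a human instead of silently letting the email through.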

In Summary

- AI can significantly improve phishing detection, but effectiveness varies by model.

- Claude 3.5 Sonnet performed best, especially in detecting expert-crafted attacks (and these are the ones we should pay particular attention to).

- GPT-4o and Mistral showed limitations, either in accuracy or false positive rates.

- Even top AI models struggle with some phishing types. This shows the need for continuous improvement.

- AI should complement, not replace, human judgment and other security measures. However, AI is getting better at making the right judgments very quickly.

- As phishing tactics evolve, AI defenses must adapt to stay effective.

Conclusion

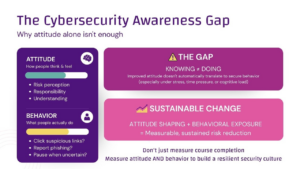

Artificial intelligence is now an integral part of cybercrime. It must also become an integral part of cyber defense and of cybersecurity awareness. AI’s ability to judge whether a message is malicious is at least as good as that of many employees. Unfortunately, no AI provider offers a fully suitable LLM out of the box. Taken at face value, the study results suggest that an organization would have to use several LLMs from different providers at the same time for cyber defense and malicious-message detection. This is impractical in most cases.
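If an organization did combine several LLMs, one simple way to merge their verdicts is voting. The Python sketch below assumes each model is wrapped in a hypothetical detector function that returns `True` when it considers an email suspicious; the keyword-based detectors in the example are placeholders, not real models.

```python
from typing import Callable, Optional, Sequence


def ensemble_verdict(email: str,
                     detectors: Sequence[Callable[[str], bool]],
                     min_votes: Optional[int] = None) -> bool:
    """Flag the email if at least min_votes detectors flag it.

    Defaults to a strict majority of the detectors.
    """
    if not detectors:
        raise ValueError("need at least one detector")
    if min_votes is None:
        min_votes = len(detectors) // 2 + 1
    # Each detector is one (hypothetical) model call per email.
    votes = sum(1 for detect in detectors if detect(email))
    return votes >= min_votes


# Placeholder detectors standing in for LLMs from different providers.
detectors = [
    lambda e: "password" in e.lower(),
    lambda e: "urgent" in e.lower(),
    lambda e: "http://" in e.lower(),
]

print(ensemble_verdict("URGENT: reset your password", detectors))  # 2 of 3 votes
```

Lowering `min_votes` to 1 flags an email whenever any model does, which catches more attacks at the cost of more false alarms; requiring every model to agree does the opposite. Either way, each email costs one call per model, which adds to the cost and latency of running several LLMs side by side.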

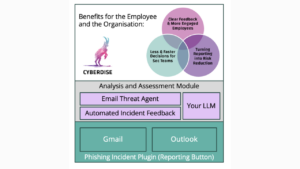

Follow CYBERDISE | Cybersecurity Awareness – cybersecurity awareness startup from Switzerland with AI at its core, aiming to replace traditional ineffective solutions in the market 🚀

Study Source: “Evaluating Large Language Models’ Capability to Launch Fully Automated Spear Phishing Campaigns,” arXiv:2412.00586v1, 30 Nov 2024.
